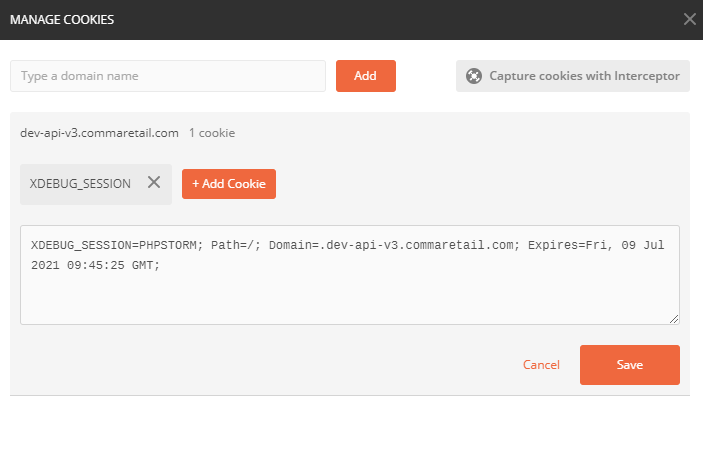

Creating XDEBUG debug cookies

Category archive: docker

Viewing a container's launch parameters

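1. Install runlike: pip install runlike

2. Print the docker run command of a container (-p spreads it over multiple lines): runlike -p CONTAINER_ID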

root@ubuntu:~# runlike -p 498c3ad49c46
docker run \
    --name=nginx \
    --hostname=nginx \
    --env=PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin \
    --env='NGINX_VERSION=1.15.12-1~stretch' \
    --env='NJS_VERSION=1.15.12.0.3.1-1~stretch' \
    --volume=/data/php/dnmp/nginx/conf.d:/etc/nginx/conf.d:ro \
    --volume=/data/php/www:/www:rw \
    --volume=/data/php/dnmp/nginx/nginx.conf:/etc/nginx/nginx.conf:ro \
    --volume=/etc/nginx/conf.d \
    --volume=/etc/nginx/nginx.conf \
    --volume=/www \
    --network=dnmp_lnmp \
    -p 443:443 \
    --expose=80 \
    --label com.docker.compose.project="dnmp" \
    --label com.docker.compose.version="1.18.0" \
    --label com.docker.compose.oneoff="False" \
    --label com.docker.compose.service="nginx" \
    --label com.docker.compose.config-hash="4ad2cd21b7c3c4d0b69776274ea51f5de602a725aaf3ec1c14c39511051aa904" \
    --label maintainer="NGINX Docker Maintainers " \
    --label com.docker.compose.container-number="1" \
    --detach=true \
    nginx:latest \
    nginx -g 'daemon off;'
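runlike assembles this command from the container's docker inspect data. The Go sketch below illustrates the same idea. It assumes a single element of the inspect JSON array and reads only a tiny subset of its fields (Name, Config.Env, Config.Image, HostConfig.Binds). The struct and function names are hypothetical, and unlike runlike the sketch does no shell quoting.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// inspect mirrors a tiny, hypothetical subset of one `docker inspect` record.
type inspect struct {
	Name   string `json:"Name"`
	Config struct {
		Env   []string `json:"Env"`
		Image string   `json:"Image"`
	} `json:"Config"`
	HostConfig struct {
		Binds []string `json:"Binds"`
	} `json:"HostConfig"`
}

// runCommand rebuilds a multi-line `docker run` command from one inspect record.
// Values are not shell-quoted (runlike quotes them; this sketch does not).
func runCommand(raw []byte) (string, error) {
	var c inspect
	if err := json.Unmarshal(raw, &c); err != nil {
		return "", err
	}
	parts := []string{"docker run"}
	// docker inspect reports the name with a leading slash, e.g. "/nginx".
	parts = append(parts, "--name="+strings.TrimPrefix(c.Name, "/"))
	for _, e := range c.Config.Env {
		parts = append(parts, "--env="+e)
	}
	for _, b := range c.HostConfig.Binds {
		parts = append(parts, "--volume="+b)
	}
	parts = append(parts, c.Config.Image)
	// Join with " \" + newline + indentation, like runlike -p.
	return strings.Join(parts, " \\\n    "), nil
}

func main() {
	raw := []byte(`{"Name":"/nginx","Config":{"Env":["NGINX_VERSION=1.15.12-1~stretch"],"Image":"nginx:latest"},"HostConfig":{"Binds":["/data/php/www:/www:rw"]}}`)
	cmd, err := runCommand(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(cmd)
}
```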

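Container cleanup

1. List the IDs of all containers, including stopped ones:

docker ps -aq

2. Stop all containers:

docker stop $(docker ps -aq)

3. Remove all containers:

docker rm $(docker ps -aq)

4. Prune everything unused in one step: stopped containers, unused networks, all unused images (not only dangling ones) and the build cache. -f skips the confirmation prompt. Volumes are only removed if --volumes is added.

docker system prune -a -f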

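An outdated Docker engine may not be fully compatible with images built by newer Docker versions. This can show up as permission errors inside the container when directories are mounted; upgrading Docker resolves it.

Remove the currently installed docker-ce packages:

rpm -qa | grep docker|xargs yum remove -y

Install the latest version: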

curl -fsSL https://get.docker.com/ | sh

miniserver configuration

version: '3.1'
services:
  mysql:
    image: mysql:5.6.40
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: 123456
    # links:
    ports:
      - 3306:3306
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - /etc/timezone:/etc/timezone:ro
      - /opt/mysql/data:/var/lib/mysql
  redis:
    image: docker.io/redis:latest
    restart: always
    ports:
      - 6379:6379
  redis-cluster:
    image: grokzen/redis-cluster:5.0.7
    restart: always
    environment:
      STANDALONE: 'true'
      IP: '0.0.0.0'
    ports:
      - '7000-7050:7000-7050'
      - '5000-5010:5000-5010'
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - /etc/timezone:/etc/timezone:ro
  prometheus:
    image: prom/prometheus:v2.15.2
    restart: always
    ports:
      - 9090:9090
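In the redis-cluster service, a mapping such as '7000-7050:7000-7050' publishes every port in the range one-to-one, which is 51 ports here.

The Go sketch below expands such a short-syntax mapping into individual host:container pairs. The function names are hypothetical. It handles only the plain HOST:CONTAINER form with equal-sized ranges and no IP or protocol; other forms, such as a host range mapped to a single container port, are rejected.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseRange parses "7000" or "7000-7050" into inclusive bounds.
func parseRange(s string) (int, int, error) {
	lo, hi, found := strings.Cut(s, "-")
	start, err := strconv.Atoi(lo)
	if err != nil {
		return 0, 0, err
	}
	if !found {
		return start, start, nil
	}
	end, err := strconv.Atoi(hi)
	if err != nil {
		return 0, 0, err
	}
	if end < start {
		return 0, 0, fmt.Errorf("invalid range %q", s)
	}
	return start, end, nil
}

// expandPorts turns "HOST:CONTAINER" (each side a port or an equal-sized range)
// into individual "host:container" pairs, mapped one-to-one in order.
func expandPorts(mapping string) ([]string, error) {
	hostPart, ctrPart, ok := strings.Cut(mapping, ":")
	if !ok {
		return nil, fmt.Errorf("expected HOST:CONTAINER, got %q", mapping)
	}
	hLo, hHi, err := parseRange(hostPart)
	if err != nil {
		return nil, err
	}
	cLo, cHi, err := parseRange(ctrPart)
	if err != nil {
		return nil, err
	}
	if hHi-hLo != cHi-cLo {
		return nil, fmt.Errorf("range sizes differ in %q", mapping)
	}
	pairs := make([]string, 0, hHi-hLo+1)
	for i := 0; i <= hHi-hLo; i++ {
		pairs = append(pairs, fmt.Sprintf("%d:%d", hLo+i, cLo+i))
	}
	return pairs, nil
}

func main() {
	pairs, err := expandPorts("7000-7050:7000-7050")
	if err != nil {
		panic(err)
	}
	fmt.Println(len(pairs), pairs[0], pairs[len(pairs)-1])
}
```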

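Common operations for Node.js and TypeScript

1. Install Node.js; version v8.11.3 is recommended:

https://nodejs.org/dist/v8.11.3/node-v8.11.3-x64.msi

2. Install Visual Studio Code (latest version).

[Do not run npm commands in PowerShell. In my environment, running npm config set to change the package registry fails in PowerShell with very strange errors, for reasons I have not yet figured out. Use cmd.exe instead.]

3. Install the global packages: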

npm install -g typescript

npm install -g pm2

npm install -g ts-node

4. Install the project's local dependencies:

npm install

5. If the installation seems broken, clear the npm cache and installed packages by deleting these directories:

%appdata%/npm and %appdata%/npm-cache

Containerized deployment of the woweb Yii2 service

version: '3.1'
networks:
  default:
    driver: bridge
    driver_opts:
      com.docker.network.enable_ipv6: "false"
    ipam:
      driver: default
      config:
        - subnet: 192.168.57.0/24
services:
  mysql:
    image: mysql:5.5.60
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: abc3.0123
    # links:
    ports:
      - 13306:3306
      # Port 13306 is used by other people; do not change it.
    volumes:
      - ./data/share/localtime:/etc/localtime:ro
      - ./data/share/timezone:/etc/timezone:ro
      - ./data/mysql/data:/var/lib/mysql
  php:
    image: yiisoftware/yii2-php:7.2-fpm
    restart: always
    ports:
      - 9900:9000
    links:
      - mysql:mysql
    extra_hosts:
      - mysql.woterm.com:abc.24.129.221
    depends_on:
      - mysql
    volumes:
      - ./data/share/localtime:/etc/localtime:ro
      - ./data/share/timezone:/etc/timezone:ro
      - ./data/wwwroot:/home/wwwroot
      - ./data/wwwlogs:/home/wwwlogs
      - ./../../woweb:/home/wwwroot/woweb
      # php-fpm runs as www-data, so wwwroot must be made writable for it (chmod a+w).
  nginx:
    image: nginx:1.13.6
    restart: always
    ports:
      - 80:80
    links:
      - mysql
      - php
    depends_on:
      - mysql
      - php
    volumes:
      - ./data/share/localtime:/etc/localtime:ro
      - ./data/share/timezone:/etc/timezone:ro
      - ./data/nginx/conf/nginx.conf:/etc/nginx/nginx.conf:ro
      - ./data/nginx/conf/vhost:/etc/nginx/vhost:ro
      - ./data/wwwroot:/home/wwwroot
      - ./data/wwwlogs:/home/wwwlogs
      - ./../../woweb:/home/wwwroot/woweb
  ftp:
    image: stilliard/pure-ftpd
    restart: always
    ports:
      - "21:21"
    volumes:
      - ./data/vsftp:/home/vsftp
    environment:
      FTP_USER_NAME: uftp
      FTP_USER_PASS: xxxxxxx
      FTP_USER_HOME: ./data/vsftp/home
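The networks section pins the project's default network to 192.168.57.0/24, so containers receive addresses from that range. If a service is later given a static address (for example with the compose ipv4_address key), that address must fall inside the subnet.

The Go sketch below performs that check with the standard net package. The helper name and the sample addresses are illustrative only.

```go
package main

import (
	"fmt"
	"net"
)

// inSubnet reports whether ip lies inside the CIDR block.
func inSubnet(cidr, ip string) (bool, error) {
	_, network, err := net.ParseCIDR(cidr)
	if err != nil {
		return false, err
	}
	addr := net.ParseIP(ip)
	if addr == nil {
		return false, fmt.Errorf("invalid IP %q", ip)
	}
	return network.Contains(addr), nil
}

func main() {
	for _, ip := range []string{"192.168.57.10", "192.168.58.10"} {
		ok, err := inSubnet("192.168.57.0/24", ip)
		if err != nil {
			panic(err)
		}
		fmt.Println(ip, ok)
	}
}
```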

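The relationship between CMD and ENTRYPOINT in a Dockerfile

The example below shows how CMD and ENTRYPOINT interact when both are written in exec (JSON array) form.

CMD acts like the application's default arguments, while ENTRYPOINT acts like its main entry point (the main program). Arguments passed to docker run replace CMD and are appended to ENTRYPOINT.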

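FROM centos
# Docker only treats "#" at the start of a line as a comment, so comments go on their own lines;
# text after the JSON array would break the exec form.
# Default arguments passed to ENTRYPOINT.
CMD ["echo 'p222 in cmd'"]
# Application entry point, comparable to a program's main function.
ENTRYPOINT ["echo"]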

1. Build:

docker build -t test .

2. Run the image to execute the default CMD:

docker run test

Output:

echo 'p222 in cmd'

3. Override the program's arguments:

docker run test abct123

Output:

abct123
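With the exec forms used here, the command Docker runs is the ENTRYPOINT array followed by either the docker run arguments or, if none are given, the CMD array. This explains both outputs above.

The small Go model below expresses that rule. The function is hypothetical, and it ignores shell-form instructions and the --entrypoint override.

```go
package main

import "fmt"

// finalArgv models how exec-form ENTRYPOINT and CMD combine: arguments given
// to `docker run` replace CMD, and the result is appended to ENTRYPOINT.
func finalArgv(entrypoint, cmd, runArgs []string) []string {
	args := cmd
	if len(runArgs) > 0 {
		args = runArgs
	}
	out := append([]string{}, entrypoint...)
	return append(out, args...)
}

func main() {
	entrypoint := []string{"echo"}
	cmd := []string{"echo 'p222 in cmd'"}
	// docker run test: echo receives the single argument "echo 'p222 in cmd'".
	fmt.Printf("%q\n", finalArgv(entrypoint, cmd, nil))
	// docker run test abct123: CMD is replaced by "abct123".
	fmt.Printf("%q\n", finalArgv(entrypoint, cmd, []string{"abct123"}))
}
```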

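Containerized Kafka deployment

1. Based on the implementation at https://github.com/wurstmeister/kafka-docker.

2. Also based on https://github.com/simplesteph/kafka-stack-docker-compose.

3. Building on these two references, the deployment file is as follows: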

version: '3.1'
services:
  zoo1:
    image: zookeeper:3.4.9
    hostname: zoo1
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_PORT: 2181
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
    volumes:
      - ./zk-multiple-kafka-multiple/zoo1/data:/data
      - ./zk-multiple-kafka-multiple/zoo1/datalog:/datalog
  zoo2:
    image: zookeeper:3.4.9
    hostname: zoo2
    ports:
      - "2182:2182"
    environment:
      ZOO_MY_ID: 2
      ZOO_PORT: 2182
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
    volumes:
      - ./zk-multiple-kafka-multiple/zoo2/data:/data
      - ./zk-multiple-kafka-multiple/zoo2/datalog:/datalog
  zoo3:
    image: zookeeper:3.4.9
    hostname: zoo3
    ports:
      - "2183:2183"
    environment:
      ZOO_MY_ID: 3
      ZOO_PORT: 2183
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
    volumes:
      - ./zk-multiple-kafka-multiple/zoo3/data:/data
      - ./zk-multiple-kafka-multiple/zoo3/datalog:/datalog
  kafka1:
    image: wurstmeister/kafka:2.12-2.0.1
    container_name: kafka1
    hostname: kafka1
    ports:
      - "9092:9092"
      - "1099:1099"
    environment:
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181,zoo2:2182,zoo3:2183"
      KAFKA_BROKER_ID: 1
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
      KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
      KAFKA_LISTENERS: PLAINTEXT://:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.10.100:9092
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 3
      KAFKA_JMX_OPTS: "-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=127.0.0.1 -Dcom.sun.management.jmxremote.rmi.port=1099"
      JMX_PORT: 1099
    volumes:
      - ./zk-multiple-kafka-multiple/kafka1:/kafka
      - /var/run/docker.sock:/var/run/docker.sock
    depends_on:
      - zoo1
      - zoo2
      - zoo3
  kafka2:
    image: wurstmeister/kafka:2.12-2.0.1
    container_name: kafka2
    hostname: kafka2
    ports:
      - "9093:9092"
      - "2099:1099"
    environment:
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181,zoo2:2182,zoo3:2183"
      KAFKA_BROKER_ID: 2
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
      KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
      KAFKA_LISTENERS: PLAINTEXT://:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.10.100:9093
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 3
      KAFKA_JMX_OPTS: "-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=127.0.0.1 -Dcom.sun.management.jmxremote.rmi.port=1099"
      JMX_PORT: 1099
    volumes:
      - ./zk-multiple-kafka-multiple/kafka2:/kafka
      - /var/run/docker.sock:/var/run/docker.sock
    depends_on:
      - zoo1
      - zoo2
      - zoo3
  kafka3:
    image: wurstmeister/kafka:2.12-2.0.1
    container_name: kafka3
    hostname: kafka3
    ports:
      - "9094:9092"
      - "3099:1099"
    environment:
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181,zoo2:2182,zoo3:2183"
      KAFKA_BROKER_ID: 3
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
      KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
      KAFKA_LISTENERS: PLAINTEXT://:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.10.100:9094
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 3
      KAFKA_JMX_OPTS: "-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=127.0.0.1 -Dcom.sun.management.jmxremote.rmi.port=1099"
      JMX_PORT: 1099
    volumes:
      - ./zk-multiple-kafka-multiple/kafka3:/kafka
      - /var/run/docker.sock:/var/run/docker.sock
    depends_on:
      - zoo1
      - zoo2
      - zoo3
  manager:
    image: hlebalbau/kafka-manager:2.0.0.2
    hostname: manager
    ports:
      - "9000:9000"
    environment:
      ZK_HOSTS: "zoo1:2181,zoo2:2182,zoo3:2183"
      APPLICATION_SECRET: "random-secret"
      KAFKA_MANAGER_AUTH_ENABLED: "true"
      KAFKA_MANAGER_USERNAME: "abc"
      KAFKA_MANAGER_PASSWORD: "123"
    command: -Dpidfile.path=/dev/null

4. Test code

Based on the examples of the https://github.com/segmentio/kafka-go library:

package kaf

import (
	"context"
	"fmt"
	"log"
	"time"

	"github.com/segmentio/kafka-go"
)

// LeaderProduce writes three messages directly to the leader of partition 0 of "my-topic".
func LeaderProduce() {
	topic := "my-topic"
	partition := 0

	conn, err := kafka.DialLeader(context.Background(), "tcp", "localhost:9092", topic, partition)
	if err != nil {
		log.Fatal(err)
	}
	defer conn.Close()

	conn.SetWriteDeadline(time.Now().Add(10 * time.Second))
	_, err = conn.WriteMessages(
		kafka.Message{Value: []byte(fmt.Sprint("one!", time.Now()))},
		kafka.Message{Value: []byte(fmt.Sprint("two!", time.Now()))},
		kafka.Message{Value: []byte(fmt.Sprint("three!", time.Now()))},
	)
	if err != nil {
		log.Fatal("failed to write messages: ", err)
	}
}

// LeaderConsumer reads one batch from the leader of partition 0 of "my-topic".
func LeaderConsumer() {
	topic := "my-topic"
	partition := 0

	conn, err := kafka.DialLeader(context.Background(), "tcp", "localhost:9092", topic, partition)
	if err != nil {
		log.Fatal(err)
	}
	defer conn.Close()

	conn.SetReadDeadline(time.Now().Add(10 * time.Second))
	batch := conn.ReadBatch(10e3, 1e6) // fetch 10KB min, 1MB max
	defer batch.Close()                // runs before conn.Close (deferred calls are LIFO)

	// ReadMessage returns an error once the batch is exhausted or the deadline expires.
	for {
		msg, err := batch.ReadMessage()
		if err != nil {
			break
		}
		fmt.Println(string(msg.Value))
	}
}

// ClusterProduce writes two keyed messages to "topic-A" through all three brokers;
// port only tags the log output.
func ClusterProduce(port int) {
	// make a writer that produces to topic-A, using the least-bytes distribution
	w := kafka.NewWriter(kafka.WriterConfig{
		Brokers:  []string{"localhost:9092", "localhost:9093", "localhost:9094"},
		Topic:    "topic-A",
		Balancer: &kafka.LeastBytes{},
	})
	defer w.Close()

	err := w.WriteMessages(context.Background(),
		kafka.Message{
			Key:   []byte("Key-A"),
			Value: []byte(fmt.Sprint("Hello World!", time.Now())),
		},
		kafka.Message{
			Key:   []byte("Key-B"),
			Value: []byte(fmt.Sprint("One!", time.Now())),
		},
	)
	if err != nil {
		fmt.Println(port, "error", err)
	}
}

// clusterConsume reads "topic-A" forever as member of "consumer-group-id",
// retrying after 10 seconds on errors; port only tags the log output.
func clusterConsume(port int) {
	// make a new reader that consumes from topic-A
	r := kafka.NewReader(kafka.ReaderConfig{
		Brokers:  []string{"localhost:9092", "localhost:9093", "localhost:9094"},
		GroupID:  "consumer-group-id",
		Topic:    "topic-A",
		MinBytes: 1024 * 10, // 10KB
		MaxBytes: 10e6,      // 10MB
	})
	// The loop below never exits, so a Close placed after it would be unreachable.
	defer r.Close()

	for {
		m, err := r.ReadMessage(context.Background())
		if err != nil {
			fmt.Println(port, "error.....", err)
			time.Sleep(10 * time.Second)
			continue
		}
		fmt.Printf("%v--message at topic/partition/offset %v/%v/%v: %s = %s\n", port, m.Topic, m.Partition, m.Offset, string(m.Key), string(m.Value))
	}
}
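ClusterProduce uses kafka.LeastBytes as its balancer, which routes each message to the partition that has received the least data so far.

The standalone Go sketch below illustrates that selection rule without the kafka-go dependency. The leastBytes type is hypothetical and is not kafka-go's implementation. Message size is assumed here to be the key length plus the value length.

```go
package main

import "fmt"

// leastBytes tracks how many bytes have been assigned to each partition.
type leastBytes struct {
	counts map[int]int
}

// pick returns the partition (from a non-empty list) with the fewest bytes so
// far, breaking ties by list order, and charges the message size to it.
// Message size is assumed to be len(key)+len(value).
func (lb *leastBytes) pick(key, value []byte, partitions []int) int {
	if lb.counts == nil {
		lb.counts = make(map[int]int)
	}
	best := partitions[0]
	for _, p := range partitions[1:] {
		if lb.counts[p] < lb.counts[best] {
			best = p
		}
	}
	lb.counts[best] += len(key) + len(value)
	return best
}

func main() {
	var lb leastBytes
	partitions := []int{0, 1, 2}
	for _, v := range []string{"Hello World!", "One!", "x", "yy"} {
		p := lb.pick(nil, []byte(v), partitions)
		fmt.Printf("%q -> partition %d\n", v, p)
	}
}
```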

Containerized ZooKeeper configuration

docker pull zookeeper

https://github.com/getwingm/kafka-stack-docker-compose

version: '3.1'
services:
  zoo1:
    image: zookeeper
    restart: always
    hostname: zoo1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
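Each node's ZOO_SERVERS lists all three servers but replaces its own hostname with 0.0.0.0. This makes the node bind its quorum (2888) and leader-election (3888) ports on all interfaces. The trailing ;2181 is the client port, a server-line syntax introduced in ZooKeeper 3.5.

The Go sketch below generates the value for node N. The function is hypothetical and assumes nodes named zoo1..zooN.

```go
package main

import (
	"fmt"
	"strings"
)

// zooServers builds ZOO_SERVERS for node self (1-based) among total nodes named
// zoo1..zooN, using 0.0.0.0 for the node's own entry.
func zooServers(self, total int) string {
	entries := make([]string, 0, total)
	for id := 1; id <= total; id++ {
		host := fmt.Sprintf("zoo%d", id)
		if id == self {
			host = "0.0.0.0"
		}
		entries = append(entries, fmt.Sprintf("server.%d=%s:2888:3888;2181", id, host))
	}
	return strings.Join(entries, " ")
}

func main() {
	for id := 1; id <= 3; id++ {
		fmt.Printf("zoo%d: ZOO_SERVERS=%s\n", id, zooServers(id, 3))
	}
}
```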

Spark SQL in practice

KMR

1. Log in to KMR.

2. Switch to the spark account: su - spark

3. Start the spark-shell command line on YARN:

spark-shell --master=yarn

4. Common commands:

spark.sql("create external table bhabc(`userid` bigint,`id` int,`date` string,`count` bigint,`opcnt` int,`start` int,`end` int) partitioned by (dt string) row format delimited fields terminated by ',' stored as sequencefile location '/data/behavior/bh_abc_dev'").show
spark.sql("show tables").show
spark.sql("show databases").show
spark.sql("show partitions bhwps").show
spark.sql("alter table bhwps add partition(dt='2019-05-21')").show
spark.sql("select * from bhwps where dt between '2019-05-15' and '2019-05-31' order by `count` desc").show
// Add several partitions in one statement
spark.sql("alter table bhwps add partition(dt='2019-06-22') partition(dt='2019-06-23')").show
// Repair partitions: re-sync the table's partition metadata with the partition directories on HDFS
spark.sql("msck repair table bhwps").show
// show(100, false) prints up to 100 rows instead of the default 20 and does not truncate long values
spark.sql("show partitions bhraw").show(100,false)
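Adding one partition per day by hand quickly gets repetitive. The Go sketch below produces a single ALTER TABLE statement covering a date range, in the same format as the multi-partition command above.

The function name is hypothetical. It also adds IF NOT EXISTS, which Spark SQL's ADD PARTITION accepts, so re-running the statement does not fail on partitions that already exist.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

// addPartitions returns one statement adding a dt='YYYY-MM-DD' partition for
// every day from start to end inclusive (both given as YYYY-MM-DD).
func addPartitions(table, start, end string) (string, error) {
	const layout = "2006-01-02"
	from, err := time.Parse(layout, start)
	if err != nil {
		return "", err
	}
	to, err := time.Parse(layout, end)
	if err != nil {
		return "", err
	}
	if to.Before(from) {
		return "", fmt.Errorf("end %s is before start %s", end, start)
	}
	var specs []string
	for d := from; !d.After(to); d = d.AddDate(0, 0, 1) {
		specs = append(specs, fmt.Sprintf("partition(dt='%s')", d.Format(layout)))
	}
	return fmt.Sprintf("alter table %s add if not exists %s", table, strings.Join(specs, " ")), nil
}

func main() {
	stmt, err := addPartitions("bhwps", "2019-06-22", "2019-06-23")
	if err != nil {
		panic(err)
	}
	// Paste the result into spark-shell as spark.sql("<statement>").show
	fmt.Println(stmt)
}
```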

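5. Common problems:

» Directory permission problems

Change the owner of a directory tree with hdfs dfs -chown -R OWNER[:GROUP] /path (the recursive flag is an uppercase -R), or its permission bits with hdfs dfs -chmod -R MODE /path.

Empty the HDFS trash:

hdfs dfs -expunge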